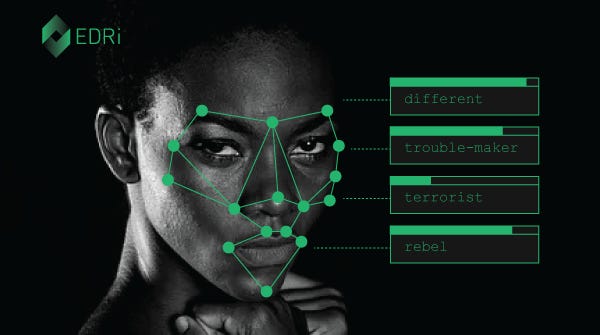

Black women face highest risk of being wrongly linked to crime by police facial recognition

These errors mean Black women with no connection to a crime are more likely than anyone else to be drawn into a police investigation

Black women are the group most likely to be falsely flagged by police facial recognition technology, according to a government-commissioned report.

The findings come from an independent assessment by the National Physical Laboratory (NPL) of the facial recognition software used on the Police National Database.

The evaluation found stark disparities in error rates by race and gender, with Black women consistently experiencing the poorest outcomes of any group at commonly used confidence levels.

Almost one in ten Black women (9.9%) were falsely matched by the system, compared with 0.4% of Black men, the study shows. By contrast, the false positive rate was 0.1% for white women and 0.0% for white men.

Reacting to the findings, Charlie Whelton, policy and campaigns officer at the civil liberties group Liberty, said the data demonstrated the harm caused by using facial recognition without adequate safeguards.

“The racial bias in these statistics shows the damaging real-life impacts of letting police use facial recognition without proper safeguards in place,” he told Black Current News.

“With thousands of searches a month using this discriminatory algorithm, there are now serious questions to be answered over just how many people of colour were falsely identified, and what consequences this had.

“Last week the Home Office started a consultation to give the public a say in how this facial recognition is used, but even this was disappointingly accompanied by a pledge to ramp up use.”

He added: “This report is yet more evidence that this powerful and opaque technology cannot be used without robust safeguards in place, including real transparency and meaningful oversight.”

While the findings were published earlier this month, the disproportionate impact on Black women has received little attention in public debate.

It has also emerged that police forces were aware of racial bias in the Police National Database facial recognition system for more than a year before the findings were made public.

Facial recognition technology compares images of people’s faces against police watchlists of known or wanted individuals. It can be used on live camera footage in public spaces or retrospectively, by running images through police, passport or immigration databases to help identify suspects.

Earlier investigative reporting by Liberty into police use of facial recognition found that forces have been running searches on the Police National Database since 2019.

By the end of 2024, more than 527,000 searches had been carried out, pointing to growing reliance on the technology. Based on the Home Office’s own figure of around 25,000 searches a month, the total is now likely to have exceeded 750,000, prompting warnings that tens of thousands of people may already have been incorrectly flagged.

Such misidentifications can have serious and potentially devastating consequences. A false positive occurs when an individual is wrongly matched to a police image despite having no connection to an offence, potentially placing them under suspicion or investigation.

Black communities are already overrepresented at multiple stages of the criminal justice system, including stop and search, arrest and imprisonment. Campaigners argue that the introduction of flawed facial recognition technology risks compounding existing inequalities.

While police guidance requires officers to manually review matches, civil liberties groups maintain that algorithmic outputs can still influence decision-making, particularly in high-volume or time-pressured environments.

The government has launched a 10-week public consultation on expanding police use of facial recognition, including proposals to allow access to additional databases such as passport and driving licence records.

Officials are also developing a new national system that would hold millions of facial images. Sarah Jones, the policing minister, has described the technology as the “biggest breakthrough since DNA matching”.

Although the Home Office has acknowledged that some demographic groups are more likely to be incorrectly included in facial recognition search results, critics say the data points to a specific and persistent risk for Black women that remains insufficiently addressed.

A Home Office spokesperson said: “The Home Office takes the findings of the report seriously and we have already taken action.

“A new algorithm has been independently tested and procured, which has no statistically significant bias. It will be tested early next year and will be subject to evaluation.

“Given the importance of this issue, we have also asked the police inspectorate, alongside the forensic science regulator, to review law enforcement’s use of facial recognition.”

Black Current News has asked the government to respond specifically to the disparity affecting Black women.